Many operating systems have been developed to carry out the operations that users request. Some perform a single operation at a time, while others perform several operations at once. Operating systems are therefore classified by the technique they use to do their work.

Serial Processing:

A serial processing operating system executes all instructions in sequence. Instructions given by the user are executed in FIFO (First In, First Out) order: the instructions entered first are executed first, and those entered later are executed later. The program counter is used to step through the instructions.

The program counter determines which instruction executes now and which one executes after it. Punch cards were mainly used for this: all jobs were first prepared and stored on cards, the cards were then fed into the system, and the instructions were executed one by one. The main problem is that the user cannot interact with the system while a job is running, so the user cannot enter data during execution.
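The FIFO order and the role of the program counter described above can be sketched in a few lines of Python. This is only an illustration: the job names and instruction strings are made up, and a real program counter holds a memory address rather than a list index.

```python
from collections import deque

def run_serially(jobs):
    """Run jobs in arrival order; each job is (name, list of instruction strings)."""
    queue = deque(jobs)              # FIFO: the first job submitted runs first
    trace = []
    while queue:
        name, instructions = queue.popleft()
        pc = 0                       # program counter: index of the next instruction
        while pc < len(instructions):
            trace.append((name, pc, instructions[pc]))
            pc += 1                  # move on to the following instruction
    return trace

# Hypothetical jobs, as if read from two decks of cards
jobs = [("job1", ["LOAD A", "ADD B"]), ("job2", ["LOAD C"])]
for step in run_serially(jobs):
    print(step)
```

No instruction of `job2` runs until every instruction of `job1` has finished, and nothing in the loop lets a user supply input part-way through.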

Batch Processing

Batch processing is similar to serial processing, but jobs of a similar type are first prepared and stored on cards, and the cards are then submitted to the system for processing. The system executes the instructions one by one, and the user cannot supply any input while the batch runs. The operating system increments the program counter to execute the next instruction.

The main problem is that the jobs prepared for execution must be of the same type, and a job that requires input from the user cannot obtain it. Preparing the batch also takes considerable time. The batch contains the jobs, and all of them are executed without user intervention. The operating system uses LOAD and RUN operations: it first LOADs a job from the card and then executes its instructions with the RUN command.

The processing speed depends on the jobs. It is measured as the difference between the time at which a job is submitted and the time at which its results are displayed on the screen, often called the turnaround time.
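The time difference described above can be worked out for a small batch. The sketch below assumes the jobs run strictly one after another in list order; the job names and times are invented for illustration.

```python
def turnaround_times(jobs):
    """jobs: list of (name, submit_time, run_time), executed one after another in list order."""
    clock = 0
    result = {}
    for name, submit_time, run_time in jobs:
        start = max(clock, submit_time)    # a job cannot start before it is submitted
        clock = start + run_time           # results appear when the job finishes
        result[name] = clock - submit_time # time from submission to results
    return result

# Hypothetical batch: (name, submitted at, needs this much CPU time)
batch = [("A", 0, 5), ("B", 0, 3), ("C", 2, 4)]
print(turnaround_times(batch))   # {'A': 5, 'B': 8, 'C': 10}
```

Job B needs only 3 time units but waits 5 units behind job A, which is why a short job can have a long turnaround in a batch system.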

Multiprocessing

A computer generally has a single processor, that is, just one CPU for processing instructions. Running multiple jobs on that one CPU slows each of them down. To increase processing speed we use multiprocessing, in which two or more CPUs operate under a single operating system. If one CPU fails, another CPU can take over its work. With multiprocessing, many jobs can execute at the same time, because the work is divided among the CPUs. If the first CPU completes its work before the second, the remaining work of the second CPU is divided between the two.
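One simple way to picture the division of work is a shared list of tasks from which whichever CPU becomes free first takes the next task. The sketch below simulates that idea with made-up task durations; it is not how any particular operating system schedules work, only an illustration of why an early-finishing CPU ends up helping with the rest.

```python
import heapq

def distribute(task_durations, n_cpus):
    """Give each task to whichever CPU becomes free first.

    Returns (tasks per CPU, time at which the last CPU finishes).
    """
    free_at = [(0, cpu) for cpu in range(n_cpus)]   # (time the CPU becomes free, CPU id)
    heapq.heapify(free_at)
    assigned = {cpu: [] for cpu in range(n_cpus)}
    for task, duration in enumerate(task_durations):
        t, cpu = heapq.heappop(free_at)             # earliest-free CPU takes the next task
        assigned[cpu].append(task)
        heapq.heappush(free_at, (t + duration, cpu))
    finish = max(t for t, _ in free_at)
    return assigned, finish

assigned, finish = distribute([4, 1, 1, 1, 1], n_cpus=2)
print(assigned, finish)   # {0: [0], 1: [1, 2, 3, 4]} 4
```

On a single CPU the same five tasks would take 8 time units; with two CPUs sharing the list they finish in 4.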

Multi-Programming

As described above, a batch processing system executes multiple jobs: the system first prepares a batch and then executes all the jobs stored in it. The main problems are that a job that needs an input or output operation from the user cannot be served, and that time is wasted while the batch is being prepared, during which the CPU remains idle.

With multiprogramming, several programs can be held in the system at the same time, so the CPU is rarely idle. While one program is being worked on, the user can submit a second program, and the CPU executes it when the first program completes or has to wait. The user can also supply input, which means the user can interact with the system.

Multiprogramming operating systems do not use cards, because the user enters each process on the spot. The operating system also allocates and deallocates memory, providing memory space to all running and waiting processes. All running jobs must be managed properly.

Real Time System

A real-time operating system is one in which the response time is fixed in advance: the time allowed between a request and the display of its results is bounded. Real-time systems are used wherever a fast and timely response is required, for example in reservation systems, where a request is processed as soon as it is made. There are two types of real-time system.

Hard Real Time System:

In a hard real-time system, the time limit is fixed and the processing time cannot deviate from it at all. The CPU processes the data as soon as it is entered.

Soft Real Time System

In a soft real-time system, some deviation is allowed. After the command is given, the CPU may perform the operation after a short delay, for example a microsecond later.
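The difference between the two types can be expressed as what happens when a time limit is missed. The sketch below is a simplified illustration; the `DeadlineMissed` exception and the `check_deadline` function are hypothetical names, and a real system enforces timing in the scheduler rather than checking it afterwards.

```python
class DeadlineMissed(Exception):
    """Raised when a hard real-time task overruns its time limit."""

def check_deadline(elapsed_ms, deadline_ms, hard):
    """Return True if the deadline was met.

    Hard tasks treat a miss as a failure; soft tasks report it and carry on.
    """
    if elapsed_ms <= deadline_ms:
        return True
    if hard:
        raise DeadlineMissed(f"took {elapsed_ms} ms, limit {deadline_ms} ms")
    return False   # soft: the late result is degraded but still accepted

print(check_deadline(5, 10, hard=True))     # True
print(check_deadline(12, 10, hard=False))   # False
```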

Clustered System

Like parallel systems, clustered systems gather together multiple CPUs to accomplish computational work.

Clustered systems differ from parallel systems, however, in that they are composed of two or more individual systems coupled together.

The definition of the term clustered is not concrete; the generally accepted definition is that clustered computers share storage and are closely linked via LAN networking.

Clustering is usually performed to provide high availability.

A layer of cluster software runs on the cluster nodes. Each node can monitor one or more of the others. If the monitored machine fails, the monitoring machine can take ownership of its storage, and restart the application(s) that were running on the failed machine. The failed machine can remain down, but the users and clients of the application would only see a brief interruption of service.

Parallel Clustering

Parallel clusters allow multiple hosts to access the same data on the shared storage. Because most operating systems lack support for this simultaneous data access by multiple hosts, parallel clusters are usually accomplished by special versions of software and special releases of applications.

Asymmetric Clustering

In this, one machine is in hot standby mode while the other is running the applications. The hot standby host (machine) does nothing but monitor the active server. If that server fails, the hot standby host becomes the active server.
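The hot-standby takeover can be sketched as a single monitoring check that the standby host repeats. This is a toy model: the `Node` class and `standby_check` function are hypothetical, and real cluster software detects failure with heartbeats over the network and also has to take ownership of the shared storage.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.alive = True     # does the machine still respond?
        self.active = False   # is it currently running the applications?

def standby_check(active, standby):
    """One monitoring pass by the standby: take over if the active node is down."""
    if not active.alive and not standby.active:
        active.active = False
        standby.active = True   # standby becomes the active server
    return standby.active

primary, backup = Node("primary"), Node("backup")
primary.active = True
print(standby_check(primary, backup))   # False: primary is healthy, nothing changes
primary.alive = False                   # simulate a crash
print(standby_check(primary, backup))   # True: backup has taken over
```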

Symmetric Clustering

In this, two or more hosts are running applications, and they are monitoring each other. This mode is obviously more efficient, as it uses all of the available hardware.

Parallel operating systems

Parallel operating systems are used to interface multiple networked computers to complete tasks in parallel. The architecture of the software is often a UNIX-based platform, which allows it to coordinate distributed loads between multiple computers in a network. Parallel operating systems are able to use software to manage all of the different resources of the computers running in parallel, such as memory, caches, storage space, and processing power. Parallel operating systems also allow a user to directly interface with all of the computers in the network.

A parallel operating system works by dividing sets of calculations into smaller parts and distributing them between the machines on a network. To facilitate communication between the processor cores and memory arrays, routing software has to either share its memory by assigning the same address space to all of the networked computers, or distribute its memory by assigning a different address space to each processing core.

Sharing memory allows the operating system to run very quickly, but it is usually not as powerful. When using distributed shared memory, processors have access to both their own local memory and the memory of other processors; this distribution may slow the operating system, but it is often more flexible and efficient.
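The idea of dividing a calculation into smaller parts and combining the partial results can be sketched as follows. Threads from Python's standard `concurrent.futures` module stand in here for the separate machines, which is an assumption made only to keep the example self-contained; the `split` and `parallel_sum_of_squares` names are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor

def split(data, parts):
    """Cut data into `parts` nearly equal contiguous chunks."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def parallel_sum_of_squares(data, workers=4):
    """Each worker handles one chunk; the partial sums are then combined."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(lambda chunk: sum(x * x for x in chunk),
                            split(data, workers))
        return sum(partials)

print(parallel_sum_of_squares(list(range(10))))   # 285
```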

Distributed Operating System

Distributed means that data is stored and processed at multiple locations. The data is stored on multiple computers placed in different locations, and these computers are connected to each other in a network.

When we want to take data from another computer, we use a distributed processing system. We can also insert data at, or remove data from, another location while working from our own. Data is shared among many users, and the input and output devices can also be accessed by multiple users.

SIMPLE BATCH SYSTEMS

- In this type of system, there is no direct interaction between user and the computer.

- The user has to submit a job (written on cards or tape) to a computer operator.

- The computer operator then places a batch of several jobs on an input device.

- Jobs are batched together by language type and requirements.

- Then a special program, the monitor, manages the execution of each program in the batch.

- The monitor is always in the main memory and available for execution.

Following are some disadvantages of this type of system:

- No interaction between user and computer.

- No mechanism to prioritise the processes.
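The batching step and the monitor described above can be sketched as follows. The job names and languages are invented, and `build_batches` and `run_monitor` are hypothetical helpers; running a job is reduced to recording its name.

```python
from collections import defaultdict

def build_batches(jobs):
    """Group (name, language) jobs by language, keeping submission order inside each batch."""
    batches = defaultdict(list)
    for name, language in jobs:
        batches[language].append(name)
    return dict(batches)

def run_monitor(batches):
    """Resident monitor: run every job of one batch before moving to the next batch."""
    order = []
    for language, names in batches.items():
        for name in names:
            order.append(f"{language}:{name}")   # stand-in for loading and running the job
    return order

jobs = [("payroll", "COBOL"), ("orbit", "FORTRAN"), ("ledger", "COBOL")]
print(run_monitor(build_batches(jobs)))
# ['COBOL:payroll', 'COBOL:ledger', 'FORTRAN:orbit']
```

Note that `orbit` was submitted second but runs last, and nothing in the monitor lets an urgent job jump ahead, which matches the second disadvantage listed above.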

MULTI-PROGRAMMING BATCH SYSTEMS

- In this type of system, the operating system picks up one of the jobs in memory and begins to execute it.

- Once this job needs an I/O operation, the operating system switches to another job, so the CPU and the OS are kept busy.

- The number of jobs in memory is always less than the number of jobs on disk (the job pool).

- If several jobs are ready to run at the same time, then the system chooses which one to run through the process of CPU scheduling.

- In a non-multiprogrammed system, there are moments when the CPU sits idle and does not do any work.

- In a multiprogramming system, the CPU is kept busy as long as at least one job in memory is ready to run.
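The effect of switching to another job during I/O can be seen in a small simulation. This is a toy model under stated assumptions: each job is a list of made-up (CPU time, I/O time) phases, a job keeps the CPU until it starts I/O, and switching costs nothing.

```python
def cpu_utilization(jobs):
    """jobs: list of jobs, each a list of (cpu_ticks, io_ticks) phases.

    Returns the fraction of total time during which the CPU was busy.
    """
    pending = [list(phases) for phases in jobs]   # remaining phases per job
    ready_at = [0] * len(jobs)                    # tick at which each job's I/O finishes
    clock = busy = 0
    while any(pending):
        runnable = [i for i, p in enumerate(pending) if p and ready_at[i] <= clock]
        if not runnable:
            # every remaining job is waiting on I/O, so the CPU idles until one is ready
            clock = min(ready_at[i] for i, p in enumerate(pending) if p)
            continue
        i = runnable[0]
        cpu, io = pending[i].pop(0)
        clock += cpu
        busy += cpu
        ready_at[i] = clock + io                  # job starts I/O; the CPU is free again
    total = max(clock, max(ready_at))
    return busy / total

one_job = [[(2, 8), (2, 8)]]
three_jobs = [[(2, 8), (2, 8)] for _ in range(3)]
print(cpu_utilization(one_job))      # 0.2
print(cpu_utilization(three_jobs))   # 0.5
```

With one job in memory the CPU is busy 20% of the time; with three such jobs it is busy 50% of the time. In this model the CPU can still idle when every job in memory is waiting on I/O at once.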

Time-sharing systems are very similar to multiprogramming batch systems. In fact, time-sharing systems are an extension of multiprogramming systems.

In time-sharing systems the prime focus is on minimizing the response time, while in multiprogramming the prime focus is on maximizing CPU usage.
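One common way to keep response time low is round-robin scheduling, where each job gets the CPU for a short time slice in turn. The text above does not name a specific algorithm, so this choice is an assumption; the job names, burst lengths, and the `round_robin` function are illustrative.

```python
from collections import deque

def round_robin(bursts, quantum):
    """bursts: {name: cpu_ticks_needed}. Returns (first_response, completion) tick maps."""
    queue = deque(bursts.items())
    clock = 0
    first_response, completion = {}, {}
    while queue:
        name, remaining = queue.popleft()
        first_response.setdefault(name, clock)      # when the job first gets the CPU
        ran = min(quantum, remaining)
        clock += ran
        if remaining > ran:
            queue.append((name, remaining - ran))   # unfinished: back of the queue
        else:
            completion[name] = clock
    return first_response, completion

print(round_robin({"A": 5, "B": 2, "C": 3}, quantum=2))
# ({'A': 0, 'B': 2, 'C': 4}, {'B': 4, 'C': 9, 'A': 10})
```

Every job gets its first response within 4 ticks, whereas running the jobs to completion one after another would make C wait 7 ticks before it starts.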

MULTIPROCESSOR SYSTEMS

A multiprocessor system consists of several processors that share a common physical memory. It provides higher computing power and speed. All processors operate under a single operating system, and the multiplicity of the processors and the way they act together are transparent to users.

Following are some advantages of this type of system.

- Enhanced performance

- Execution of several tasks by different processors concurrently increases the system's throughput without speeding up the execution of a single task.

- If possible, the system divides a task into many subtasks, which can then be executed in parallel on different processors, thereby speeding up the execution of a single task.

DESKTOP SYSTEMS

Earlier, CPUs and PCs lacked the features needed to protect an operating system from user programs. PC operating systems therefore were neither multiuser nor multitasking. However, the goals of these operating systems have changed with time; instead of maximizing CPU and peripheral utilization, the systems opt for maximizing user convenience and responsiveness. These systems are called Desktop Systems and include PCs running Microsoft Windows and the Apple Macintosh. Operating systems for these computers have benefited in several ways from the development of operating systems for mainframes.

Microcomputers were immediately able to adopt some of the technology developed for larger operating systems. On the other hand, the hardware costs for microcomputers are sufficiently low that individuals have sole use of the computer, and CPU utilization is no longer a prime concern. Thus, some of the design decisions made in operating systems for mainframes may not be appropriate for smaller systems.

DISTRIBUTED OPERATING SYSTEMS

The motivation behind developing distributed operating systems is the availability of powerful and inexpensive microprocessors and advances in communication technology.

These advancements in technology have made it possible to design and develop distributed systems comprising many computers that are interconnected by communication networks. The main benefit of distributed systems is their low price/performance ratio.

Following are some advantages of this type of system.

- As there are multiple systems involved, a user at one site can utilize the resources of systems at other sites for resource-intensive tasks.

- Fast processing.

- Less load on the Host Machine.

The two types of Distributed Operating Systems are:

Client-Server Systems and Peer-to-Peer Systems.Client-Server Systems

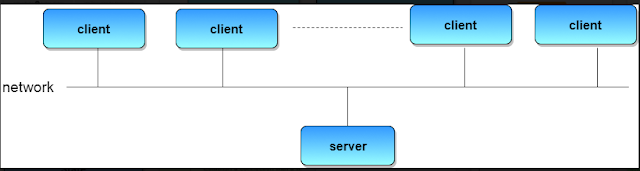

Centralized systems today act as server systems to satisfy requests generated by client systems. The general structure of a client-server system is depicted in the figure below:

Server Systems can be broadly categorized as compute servers and file servers.

- Compute-server systems provide an interface to which clients can send requests to perform an action, in response to which they execute the action and send back results to the client.

- File-server systems provide a file-system interface where clients can create, update, read, and delete files.
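The two kinds of server interface can be sketched in Python. Everything here is hypothetical: `FileServer` keeps files in a dictionary and `compute_server` handles two made-up actions, whereas a real server would receive these requests from clients over a network.

```python
class FileServer:
    """In-memory stand-in for a file server offering create, read, update, delete."""

    def __init__(self):
        self._files = {}

    def create(self, path, data=""):
        if path in self._files:
            raise FileExistsError(path)
        self._files[path] = data

    def read(self, path):
        if path not in self._files:
            raise FileNotFoundError(path)
        return self._files[path]

    def update(self, path, data):
        if path not in self._files:
            raise FileNotFoundError(path)
        self._files[path] = data

    def delete(self, path):
        if path not in self._files:
            raise FileNotFoundError(path)
        del self._files[path]

def compute_server(request):
    """Compute-server style: the client names an action, the server runs it and returns the result."""
    actions = {"add": lambda a, b: a + b, "mul": lambda a, b: a * b}
    return actions[request["action"]](*request["args"])

server = FileServer()
server.create("notes.txt", "hello")
server.update("notes.txt", "hello again")
print(server.read("notes.txt"))                           # hello again
print(compute_server({"action": "add", "args": [2, 3]}))  # 5
```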

Peer-to-Peer Systems

The growth of computer networks, especially the Internet and the World Wide Web (WWW), has had a profound influence on the recent development of operating systems. When PCs were introduced in the 1970s, they were designed for personal use and were generally considered standalone computers. With the beginning of widespread public use of the Internet in the 1980s for electronic mail and FTP, many PCs became connected to computer networks.

In contrast to the tightly coupled systems, the computer networks used in these applications consist of a collection of processors that do not share memory or a clock. Instead, each processor has its own local memory. The processors communicate with one another through various communication lines, such as high-speed buses or telephone lines. These systems are usually referred to as loosely coupled systems (or distributed systems). The general structure of a client-server system is depicted in the figure below:

CLUSTERED SYSTEMS

Clustered technology is rapidly changing. Clustered system use and features should expand greatly as Storage Area Networks (SANs) become prevalent. SANs allow easy attachment of multiple hosts to multiple storage units. Current clusters are usually limited to two or four hosts due to the complexity of connecting the hosts to shared storage.

REAL-TIME OPERATING SYSTEM

A real-time operating system is one that guarantees a maximum time for each of the critical operations it performs, such as OS calls and interrupt handling.

Real-time operating systems that guarantee a maximum time for critical operations and complete them on time are referred to as Hard Real-Time Operating Systems.

Real-time operating systems that guarantee only that a critical task gets priority over other tasks, with no assurance of completing it within a defined time, are referred to as Soft Real-Time Operating Systems.

HANDHELD SYSTEMS

Handheld systems include Personal Digital Assistants (PDAs), such as Palm-Pilots, and cellular telephones with connectivity to a network such as the Internet. Because of their limited size, most handheld devices have a small amount of memory, slow processors, and small display screens.

- Many handheld devices have between 512 KB and 8 MB of memory. As a result, the operating system and applications must manage memory efficiently. This includes returning all allocated memory back to the memory manager once the memory is no longer being used.

- Currently, many handheld devices do not use virtual memory techniques, thus forcing program developers to work within the confines of limited physical memory.

- Processors for most handheld devices often run at a fraction of the speed of a processor in a PC. Faster processors require more power. To include a faster processor in a handheld device would require a larger battery that would have to be replaced more frequently.

- The last issue confronting program designers for handheld devices is the small display screens typically available. One approach for displaying the content in web pages is web clipping, where only a small subset of a web page is delivered and displayed on the handheld device.

Some handheld devices may use wireless technology such as Bluetooth, allowing remote access to e-mail and web browsing. Cellular telephones with connectivity to the Internet fall into this category. Their use continues to expand as network connections become more available and other options, such as cameras and MP3 players, expand their utility.
